HoG Face Detection with a Sliding Window

1.1 Extract positive and random negative features

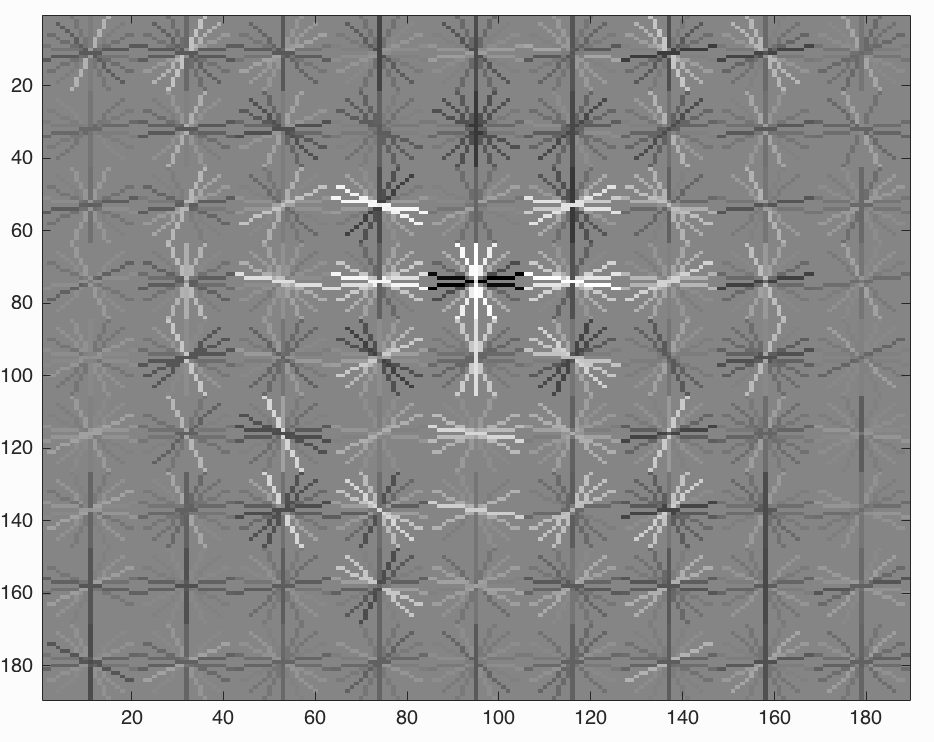

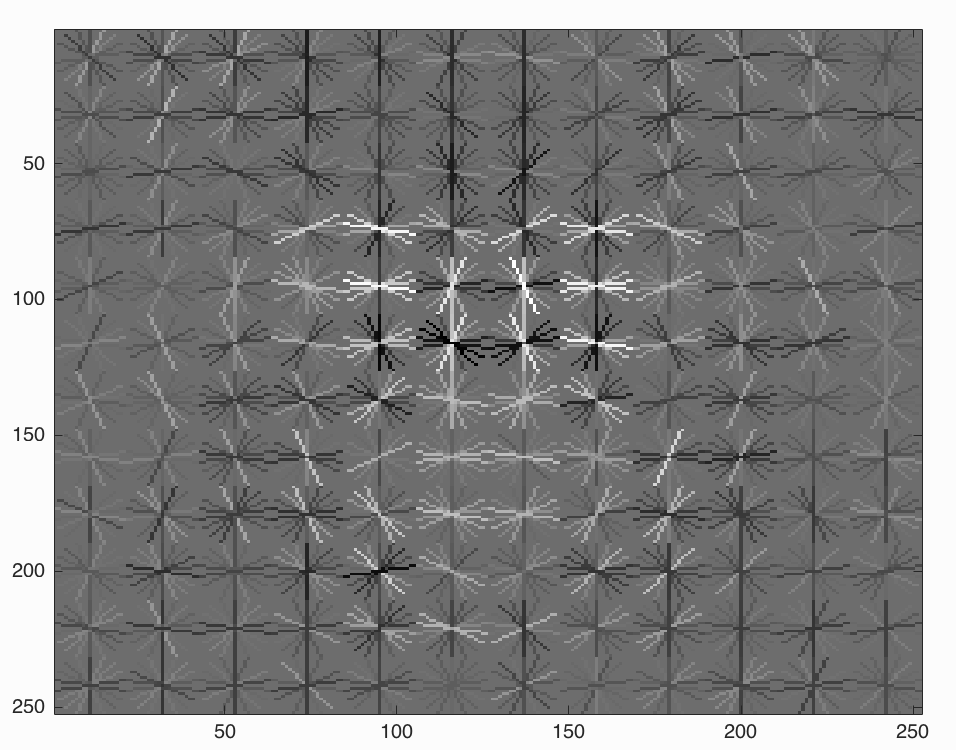

The positive data are 6,713 cropped 36x36 faces from the Caltech Web Faces project together with their mirrored versions (13,426 in total). For negative data, 36x36 patches were randomly sampled from non-face scenes at multiple scales; the final sample count was set to 85,000. I then extracted HoG features from these patches with hog_cell_size = 4 and hog_cell_size = 3, and finally chose 4. The corresponding HoG features are as follows.
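The data preparation above can be sketched roughly as follows. This is a minimal illustration, not the code used for the project: images are plain lists of pixel rows, the helper names (`mirror`, `random_patch`, `hog_cells`) are hypothetical, and the rescaling of scenes and the HoG extraction itself are left out.

```python
import random

TEMPLATE = 36  # template side in pixels, as stated in the text


def mirror(patch):
    """Reflect a patch (a list of pixel rows) left to right."""
    return [row[::-1] for row in patch]


def random_patch(image, size=TEMPLATE, rng=random):
    """Crop one size x size patch at a uniformly random position."""
    height, width = len(image), len(image[0])
    top = rng.randrange(height - size + 1)
    left = rng.randrange(width - size + 1)
    return [row[left:left + size] for row in image[top:top + size]]


def hog_cells(size=TEMPLATE, cell_size=4):
    """Number of HoG cells along one side of the template."""
    return size // cell_size


# Toy 100x120 "scene" with distinct pixel values, standing in for a real image.
scene = [[r * 120 + c for c in range(120)] for r in range(100)]
patch = random_patch(scene, rng=random.Random(0))

# Each face contributes itself and its mirror image.
n_positives = 2 * 6713
```

With a 36x36 template, a cell size of 4 gives a 9x9 grid of cells and a cell size of 3 gives a 12x12 grid, so the smaller cell size yields a longer feature vector per patch.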

1.2 Train SVM classifier

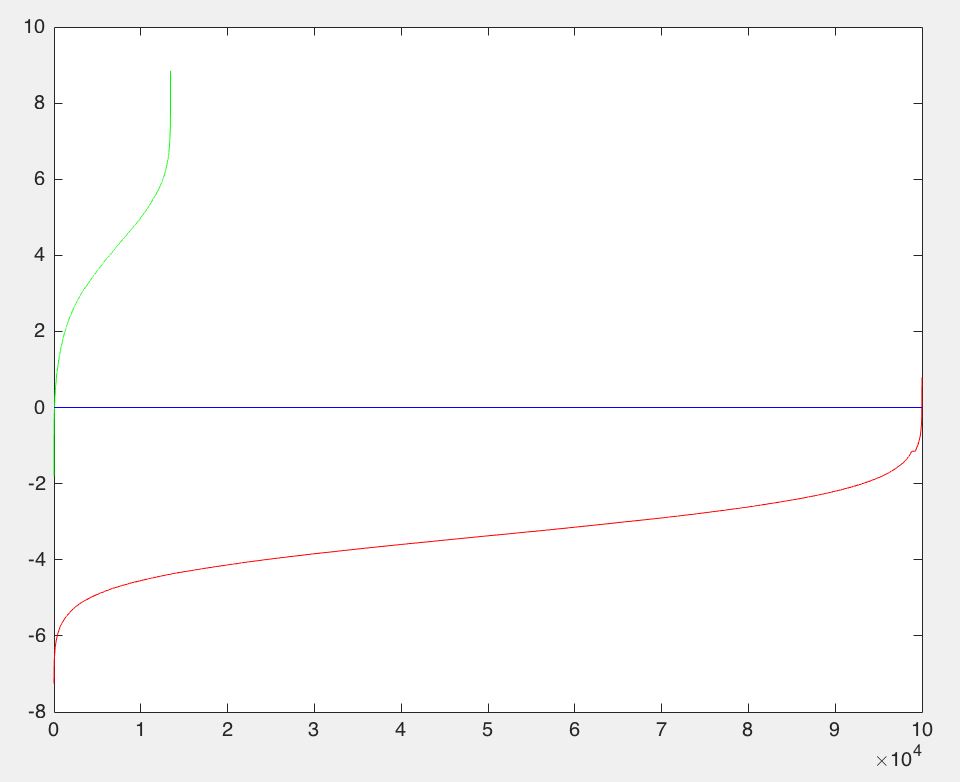

A linear SVM classifier is trained on the positive and negative features, with the regularization parameter lambda = 0.0001.
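To show where lambda enters, here is a small Pegasos-style stochastic gradient sketch that minimizes lambda/2 * ||w||^2 plus the mean hinge loss. It is an assumption-laden illustration, not the solver used for the project: the function names are hypothetical, the bias is folded in as a constant extra feature (so it is regularized too), and the data are a toy 2-D set rather than HoG features.

```python
import random


def train_linear_svm(features, labels, lam=1e-4, epochs=10, seed=0):
    """Pegasos-style SGD for a linear SVM. Labels must be +1 or -1."""
    rng = random.Random(seed)
    # Append a constant 1 so the last weight acts as the bias term.
    data = [list(x) + [1.0] for x in features]
    w = [0.0] * len(data[0])
    order = list(range(len(data)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)  # decaying step size
            margin = labels[i] * sum(wj * xj for wj, xj in zip(w, data[i]))
            # Shrink step from the lambda/2 * ||w||^2 regularizer.
            w = [(1.0 - eta * lam) * wj for wj in w]
            # Hinge-loss step, only for samples inside the margin.
            if margin < 1.0:
                w = [wj + eta * labels[i] * xj for wj, xj in zip(w, data[i])]
    return w[:-1], w[-1]


def score(w, b, x):
    """Classifier confidence; positive means 'face' in this setting."""
    return sum(wj * xj for wj, xj in zip(w, x)) + b


# Toy, linearly separable data standing in for HoG feature vectors.
X = [[2, 2], [3, 2], [2, 3], [-2, -2], [-3, -2], [-2, -3]]
y = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(X, y)
```

A smaller lambda weakens the regularizer, so the classifier fits the training data more closely.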

1.3 Hard negative mining

I applied the trained SVM to non_face_scenes again and added the patches scoring above THRESHOLD (set to 0) as hard negatives; patches found at other scales need to be rescaled to the template size. Hard negative mining helps remove false positives, but it also removes some correct detections.
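The mining step can be sketched as a simple filter over window scores. This is a hedged illustration: `score` and `mine_hard_negatives` are hypothetical names, the weights are made up, and each window is assumed to be already rescaled to the template size and converted to a feature vector.

```python
def score(w, b, feat):
    """Linear SVM confidence for one feature vector."""
    return sum(wi * fi for wi, fi in zip(w, feat)) + b


def mine_hard_negatives(w, b, window_feats, threshold=0.0):
    """Keep the non-face windows that the classifier scores above threshold.

    Every window comes from a scene without faces, so any score above the
    threshold is a false positive and is worth adding to the negative set.
    """
    return [f for f in window_feats if score(w, b, f) > threshold]


# Made-up classifier and window features, for illustration only.
w, b = [1.0, -1.0], -0.5
windows = [[2.0, 0.0], [0.0, 2.0], [1.0, 2.0], [0.2, 0.0]]
hard = mine_hard_negatives(w, b, windows)

# The mined features are appended to the original negatives before retraining.
negatives = [[0.0, 1.0]] + hard
```

Raising the threshold mines fewer but more confident false positives; lowering it adds more negatives and makes the retrained classifier more conservative.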

1.4 Detect faces on test set

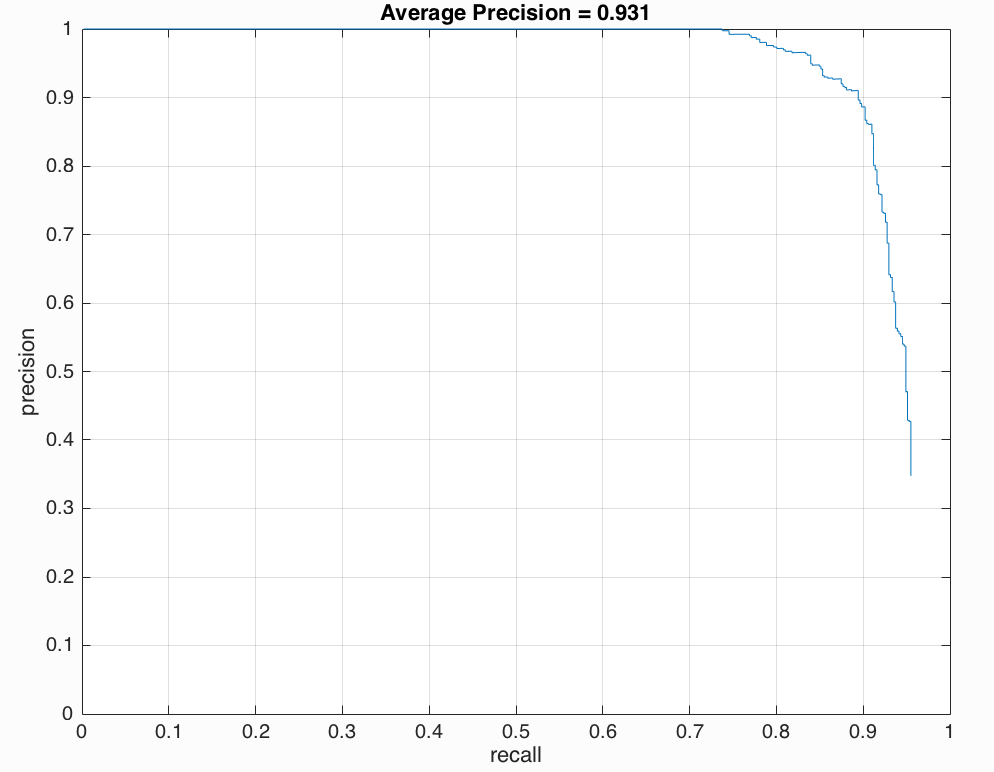

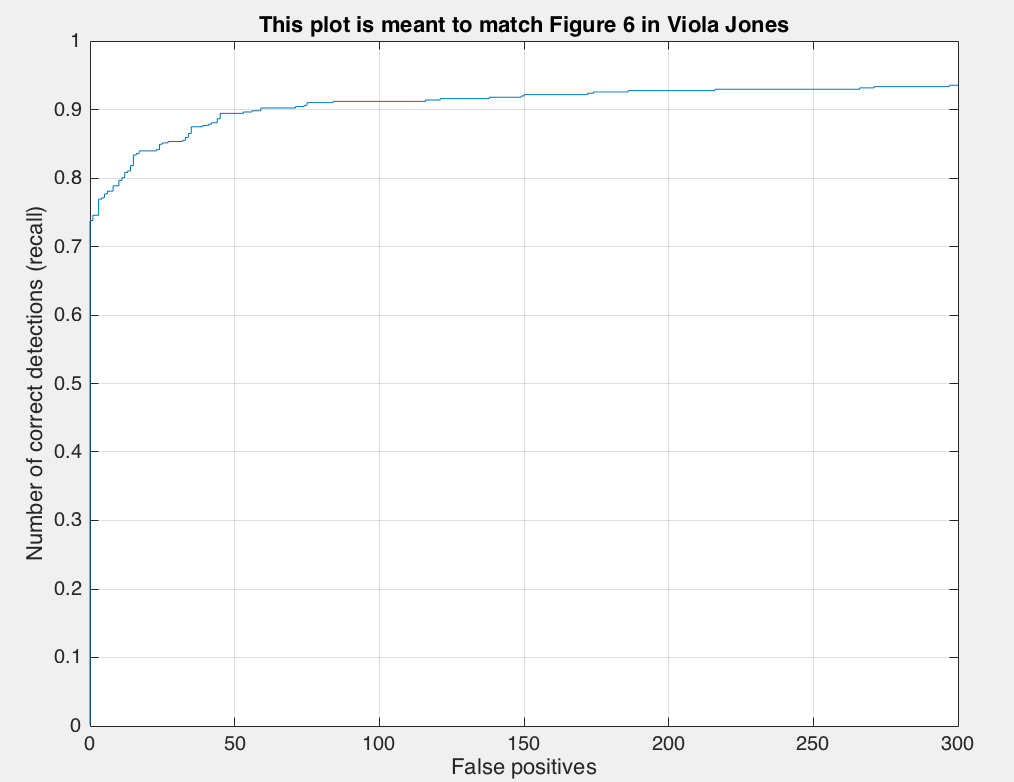

I ran the retrained SVM on the provided test set. When detecting faces at multiple scales, the image is downsampled by a factor of 0.9 between successive scales.
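The multi-scale search can be sketched as a list of scales plus a mapping from window coordinates back to the original image. The function names below are hypothetical, and the stopping rule (stop once the shorter image side no longer fits the 36-pixel template) is an assumption rather than something stated in the report.

```python
def pyramid_scales(height, width, template=36, step=0.9):
    """Scales 1, step, step^2, ... at which the resized image still fits the template."""
    scales = []
    scale = 1.0
    while min(height, width) * scale >= template:
        scales.append(scale)
        scale *= step
    return scales


def to_original_box(x, y, scale, template=36):
    """Map a template-sized window at (x, y) in the resized image
    back to (x_min, y_min, x_max, y_max) in original pixels."""
    return (
        round(x / scale),
        round(y / scale),
        round((x + template) / scale),
        round((y + template) / scale),
    )


scales = pyramid_scales(100, 200)
box = to_original_box(0, 0, 0.5)
```

A step closer to 1 produces more scales, which makes detection slower but less likely to miss faces whose size falls between two levels.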

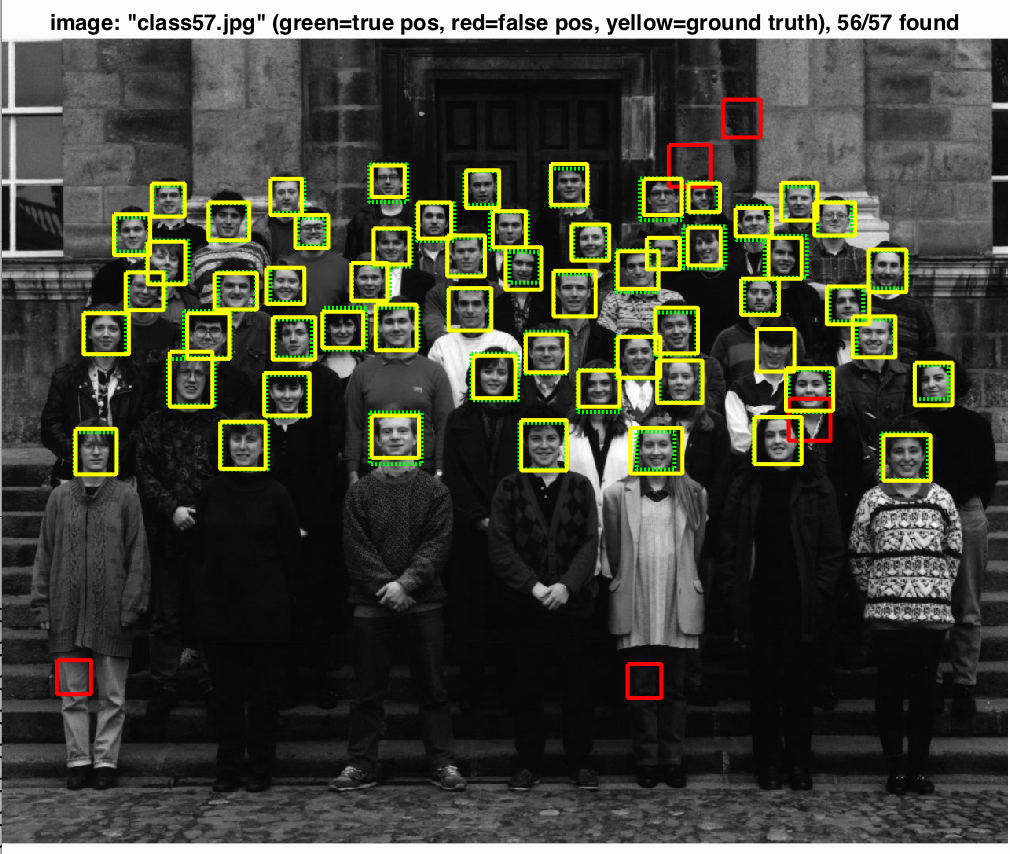

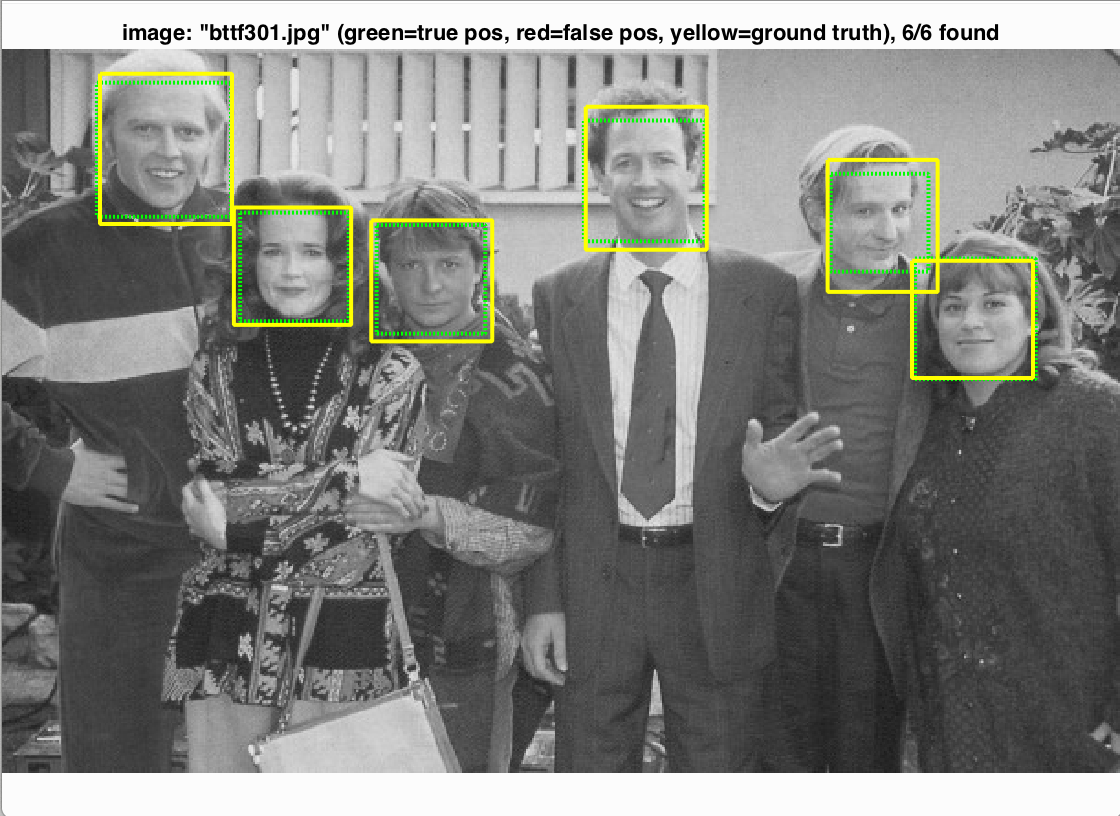

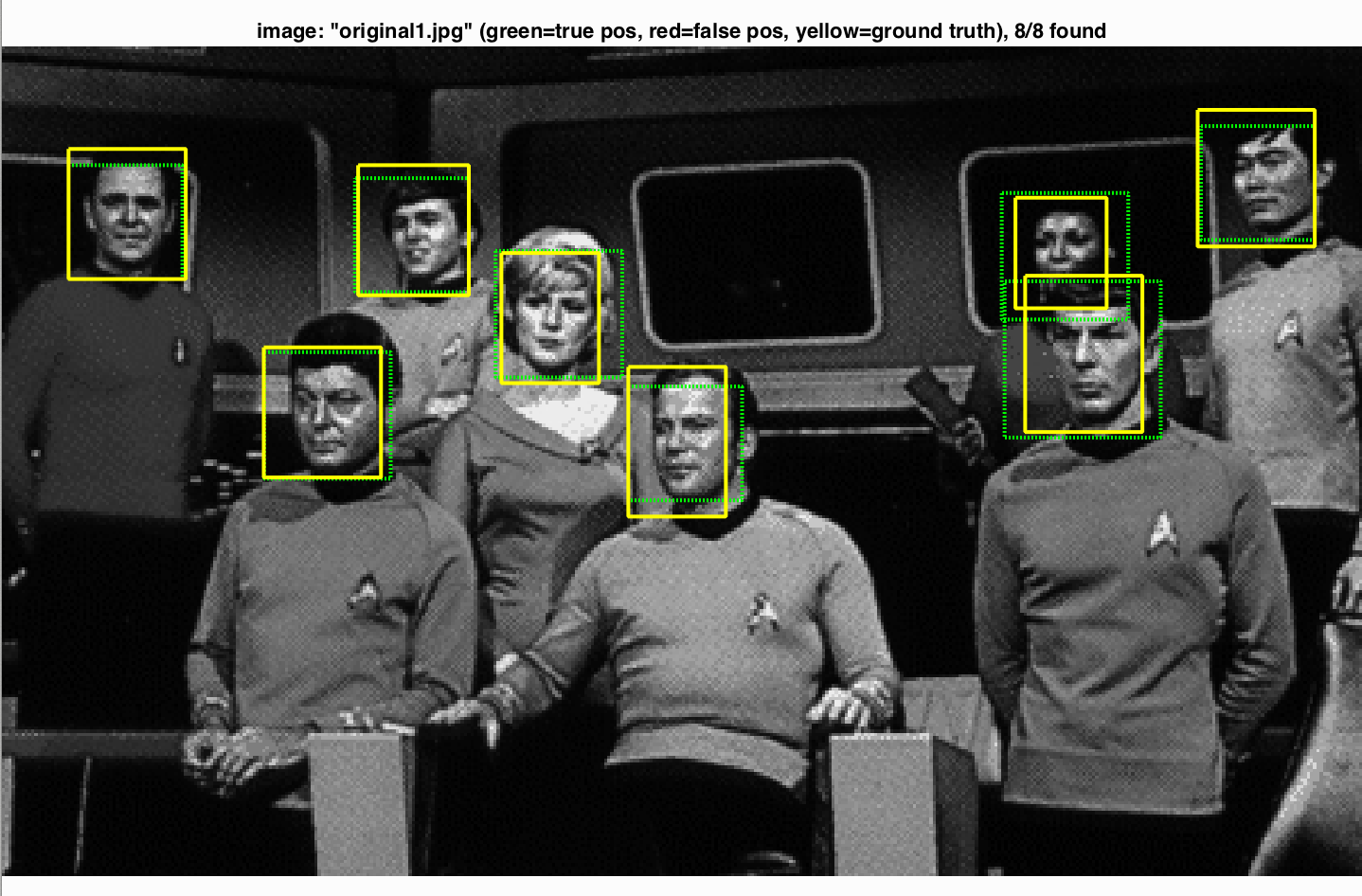

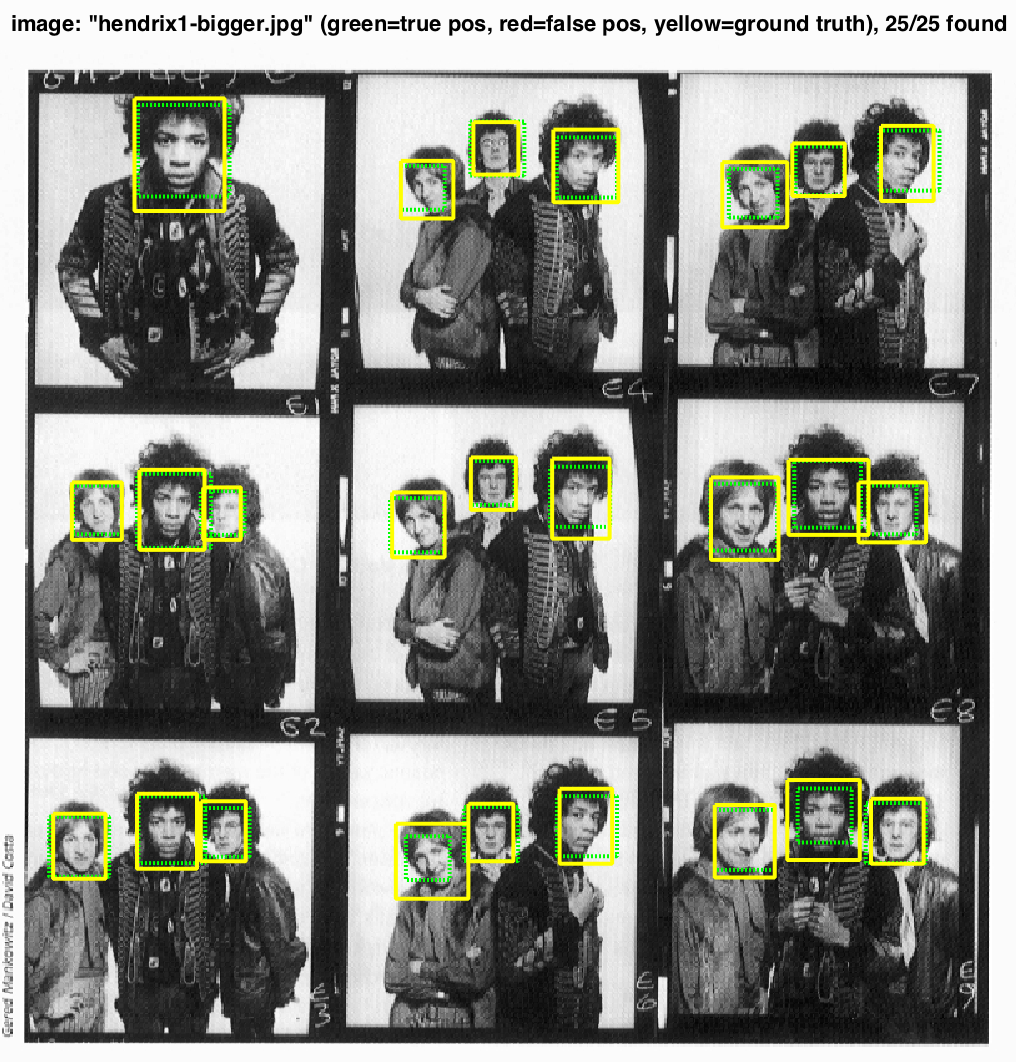

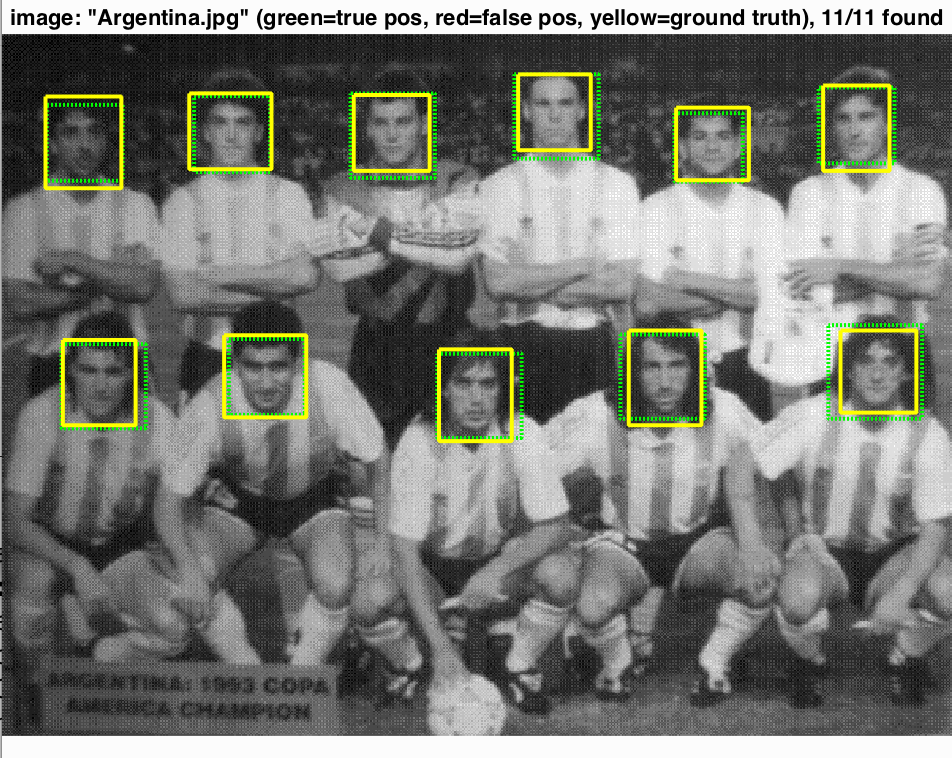

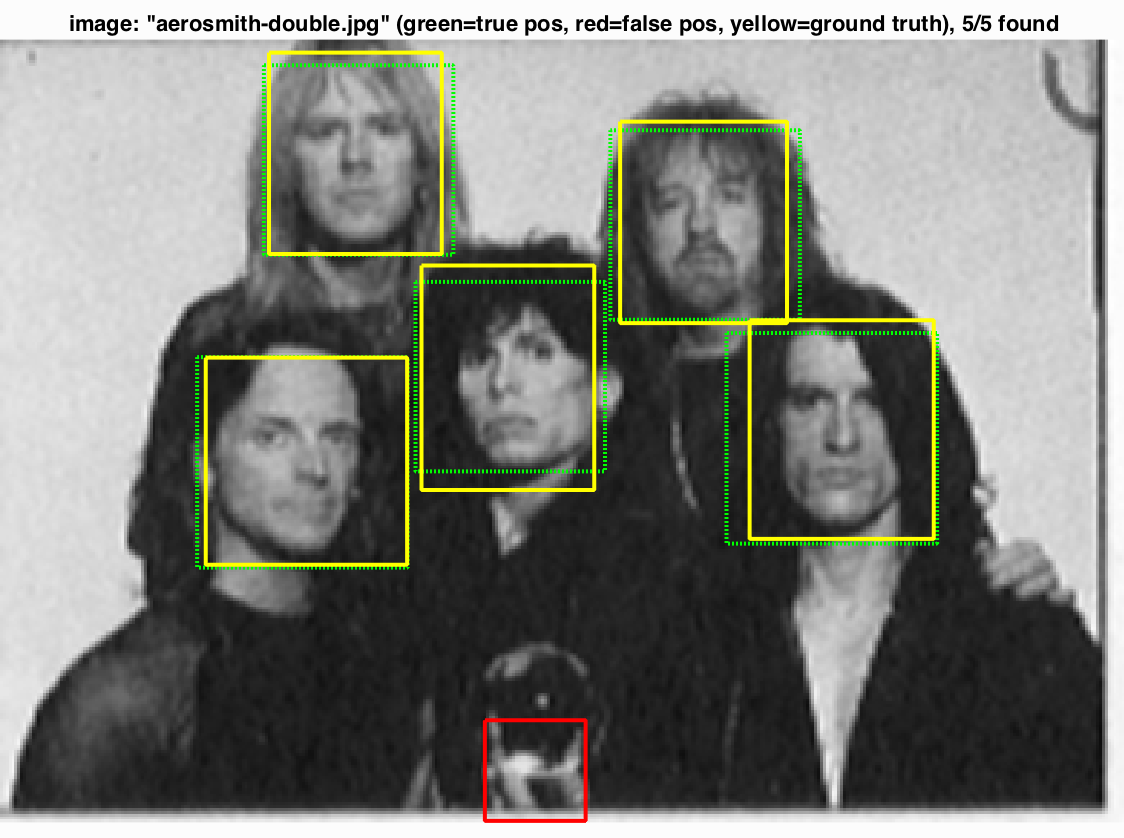

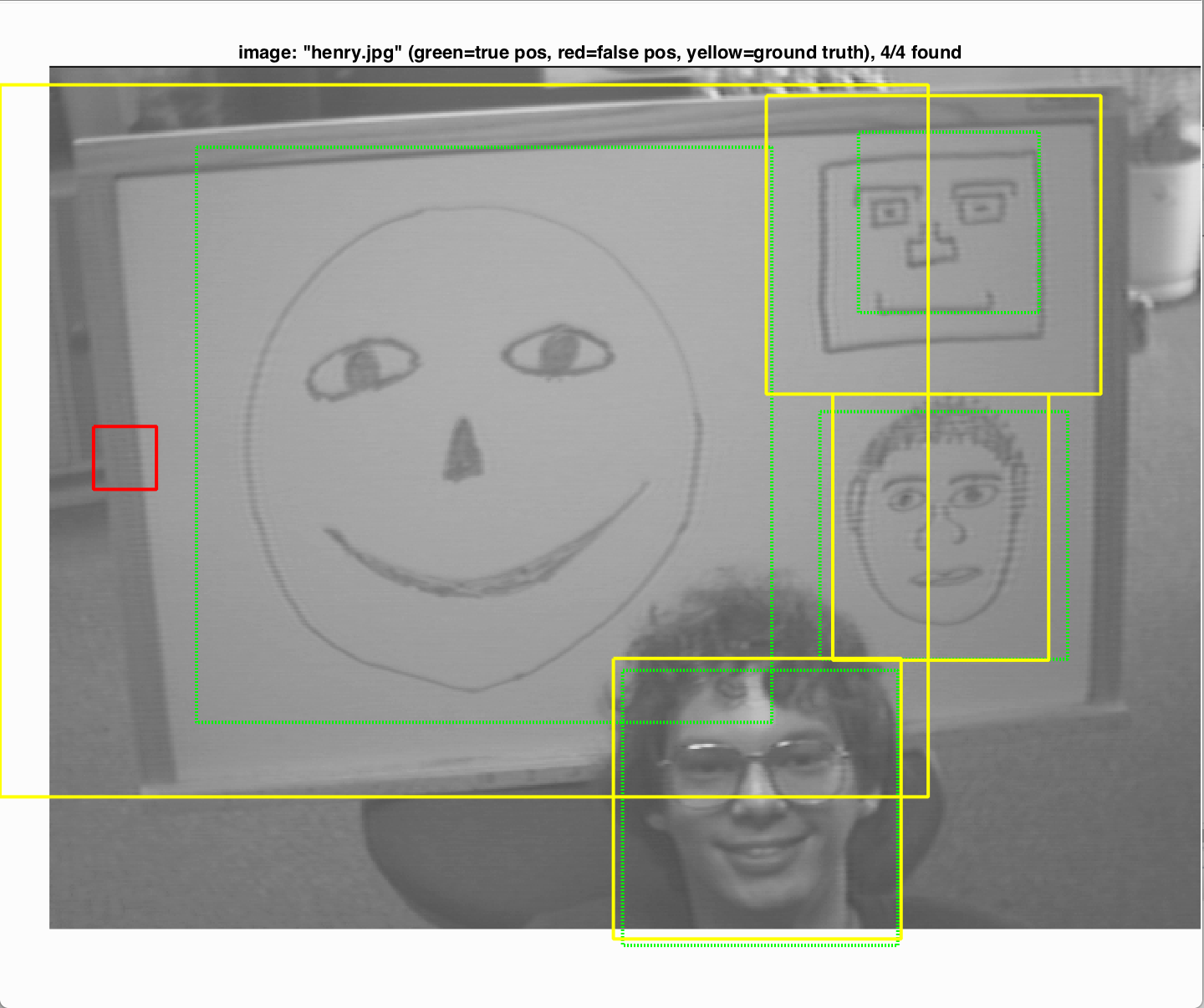

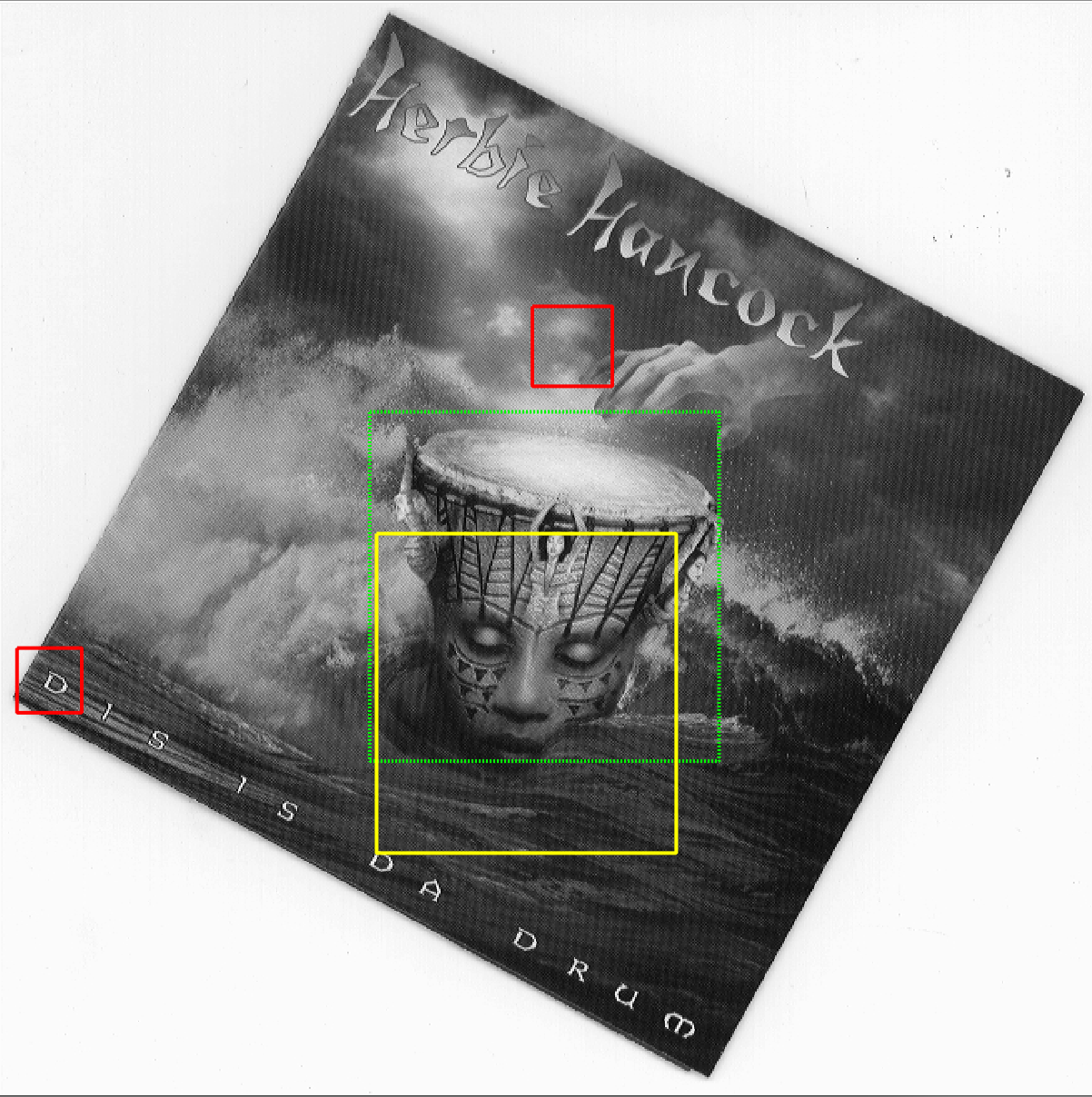

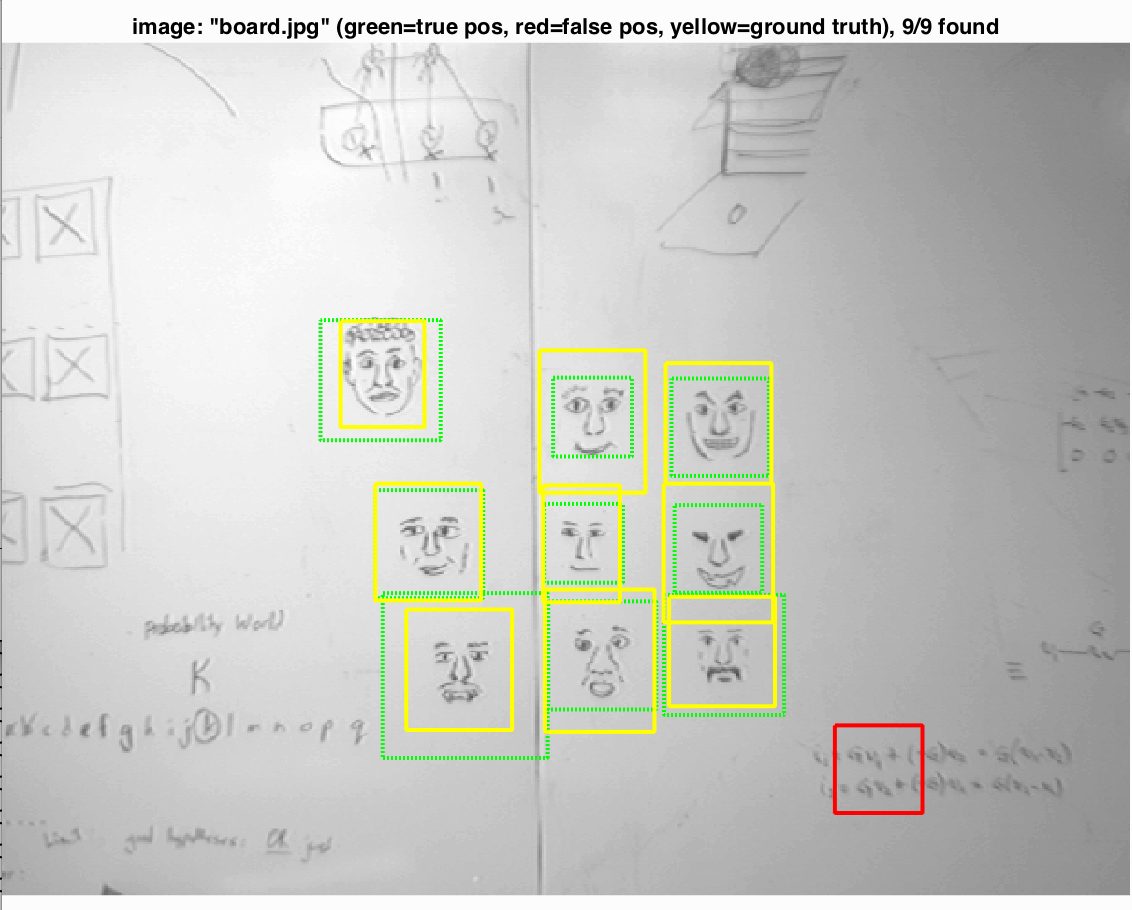

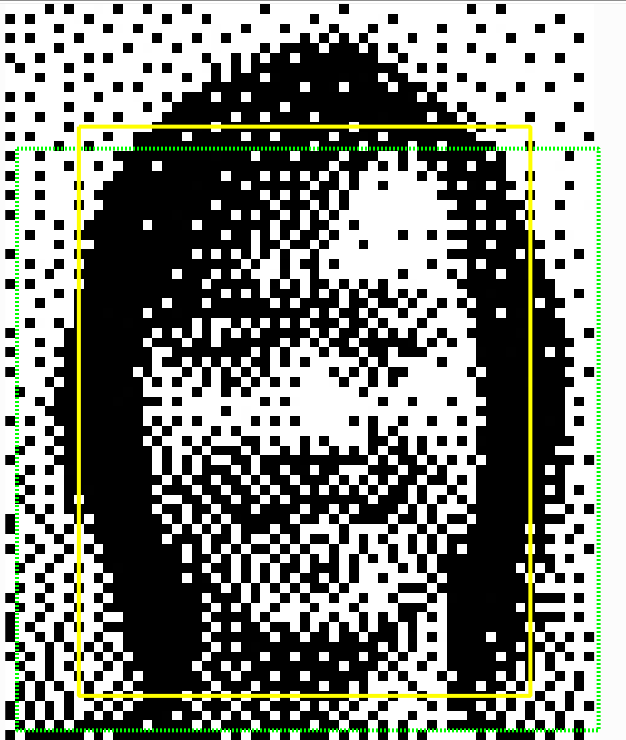

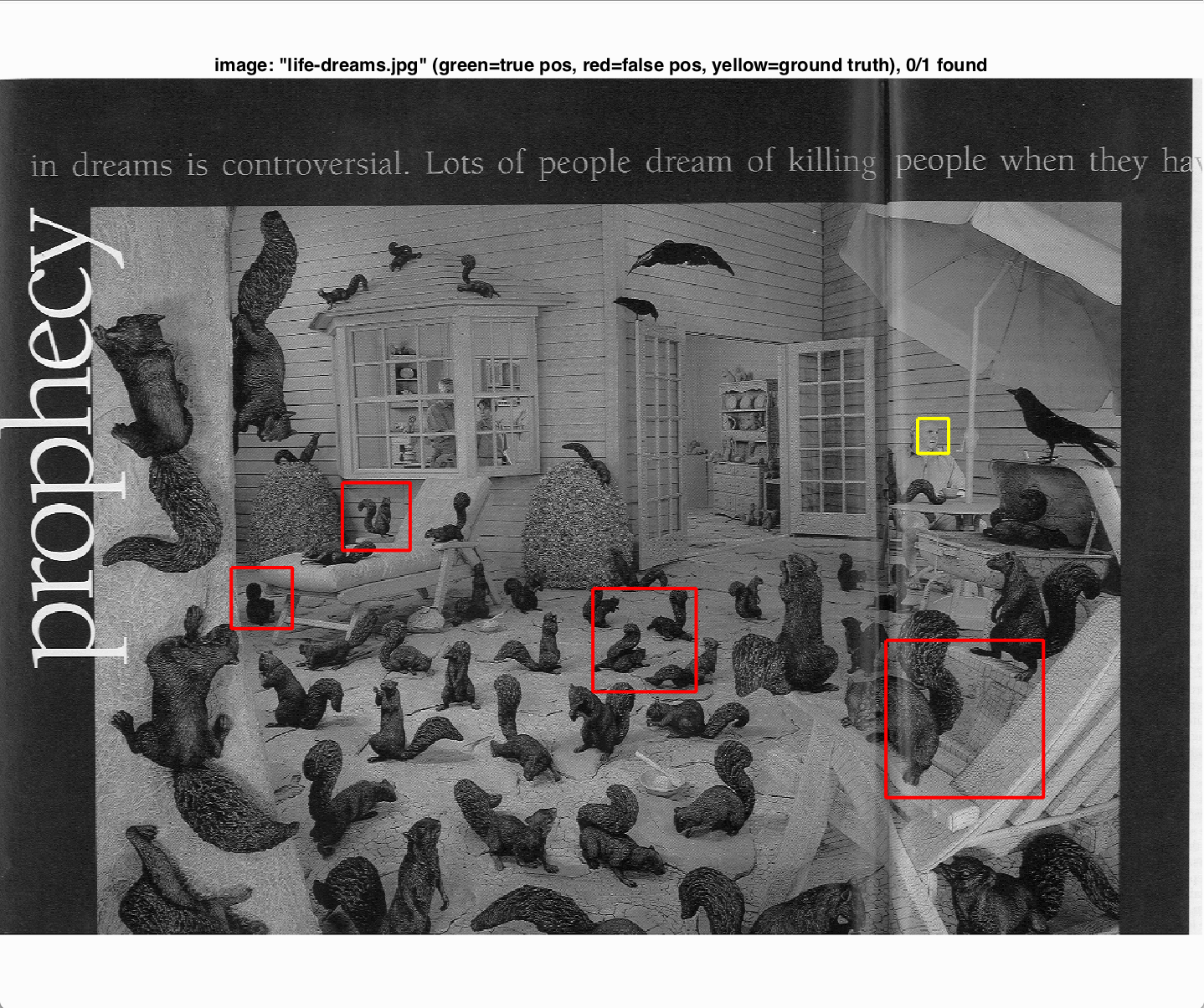

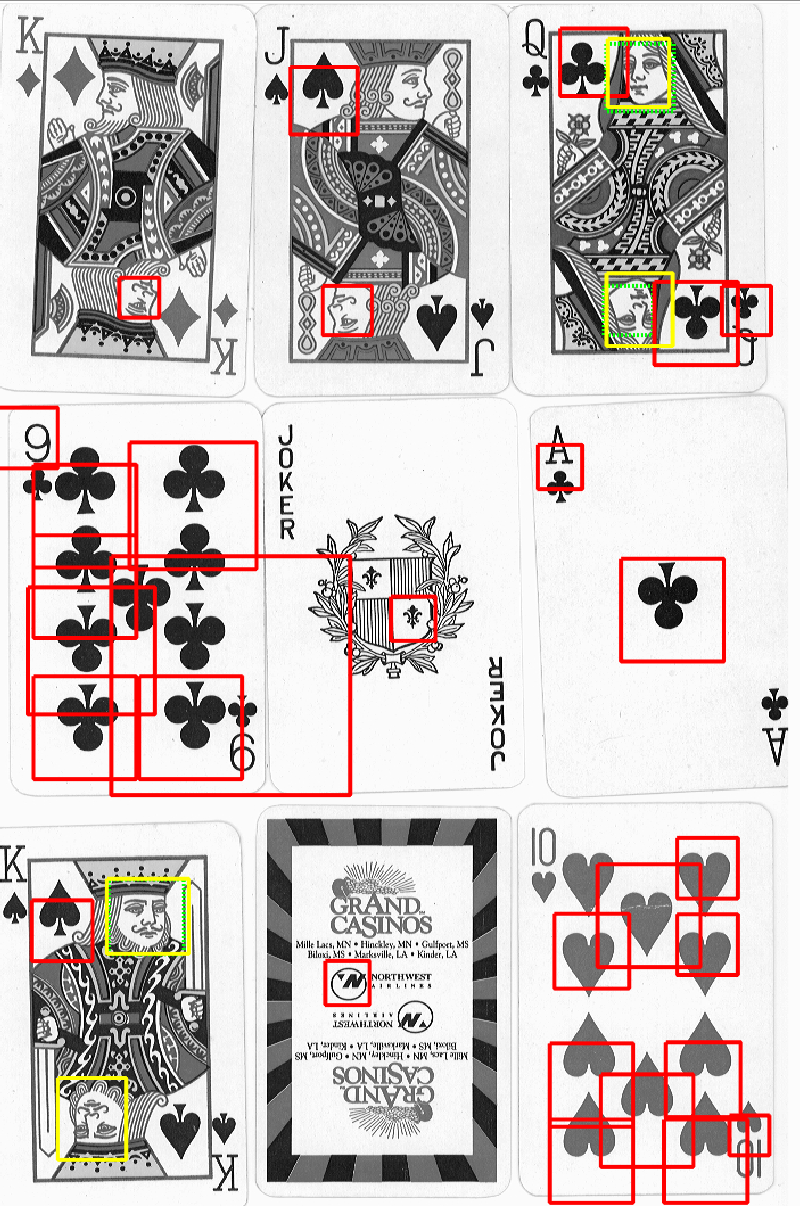

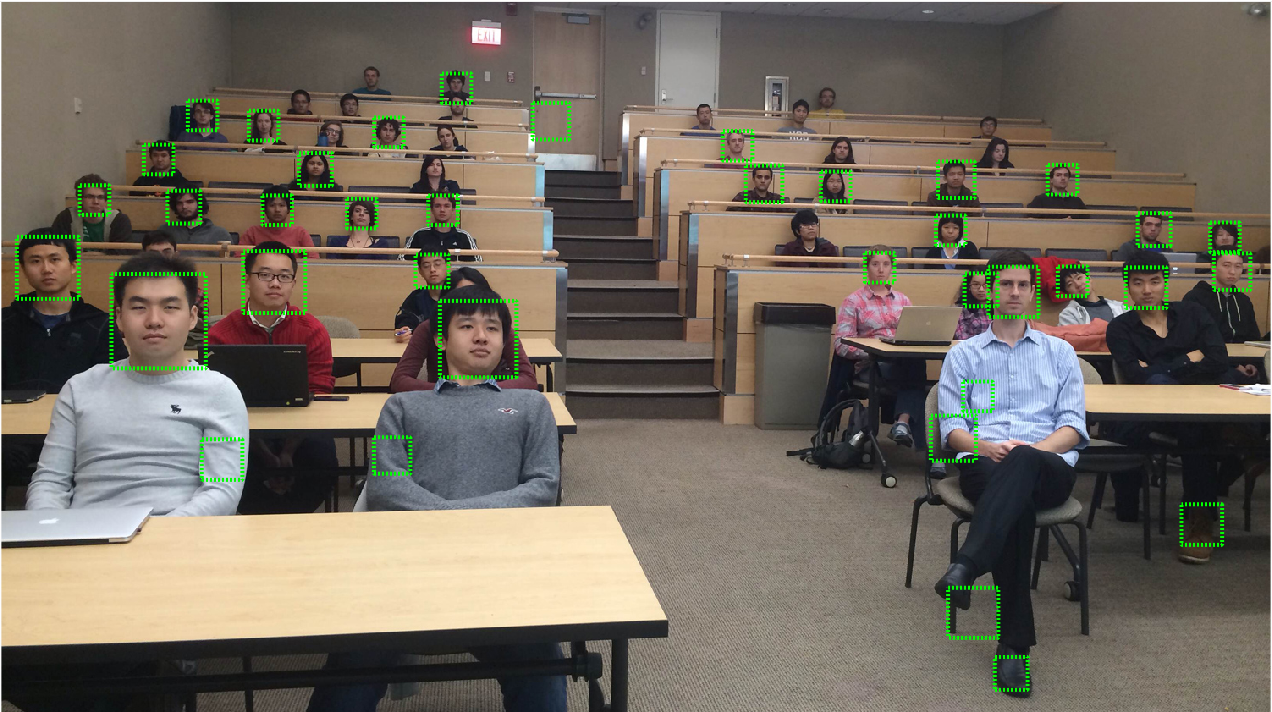

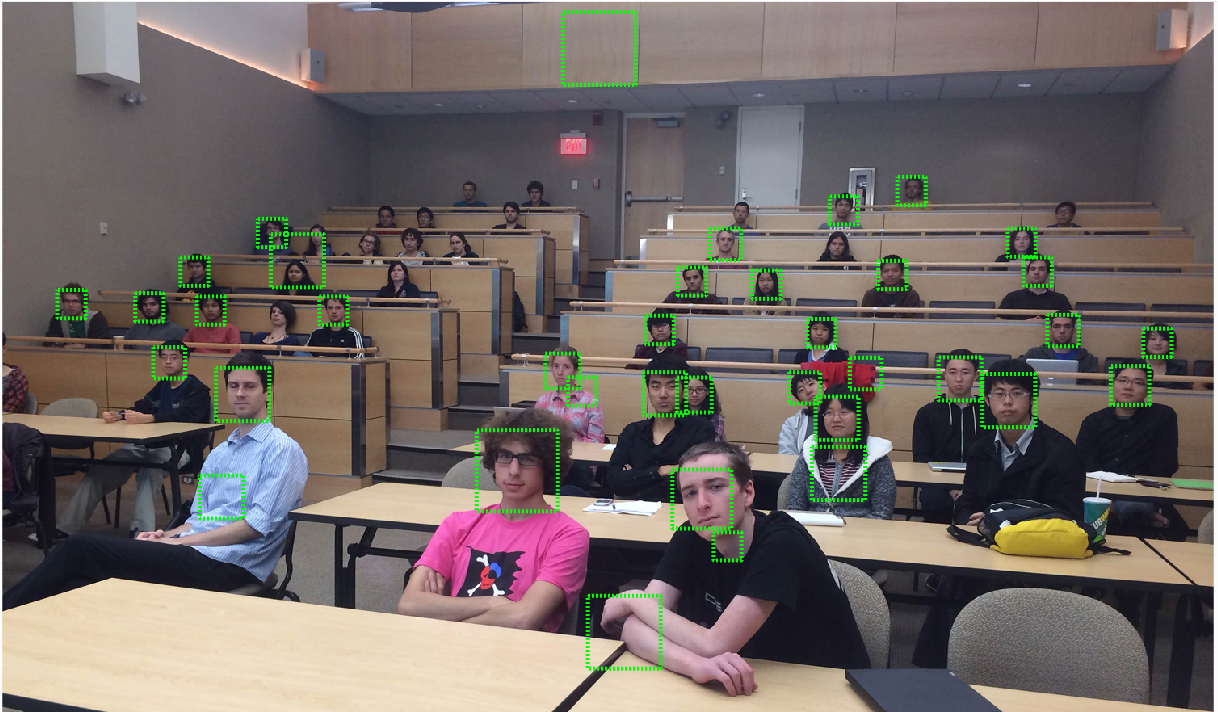

The images below show some of the face detection results: some good ones, some on non-real faces, some on processed faces, and some extremely bad ones.

Extra test results: